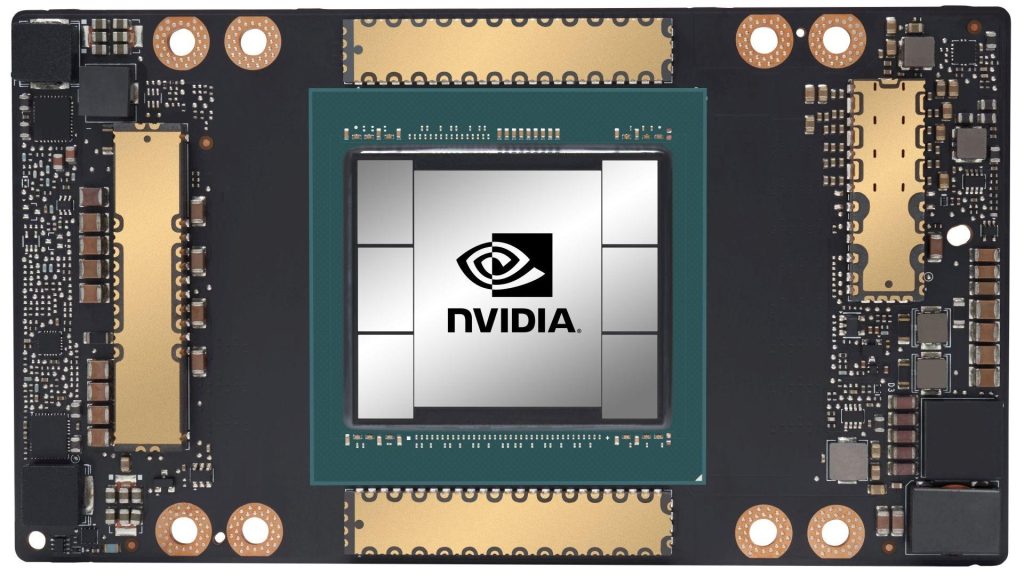

NVIDIA’s H100 Tensor Core GPU: A Powerhouse for AI and HPC

The NVIDIA H100 Tensor Core GPU is a monster in the world of computer chips. Here’s a breakdown of its key features and what makes it so powerful:

Built for Speed:

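- Manufactured on TSMC’s custom 4N process (a 4nm-class node), the H100 packs a whopping 80 billion transistors, up from roughly 54 billion in its predecessor, the A100. That larger transistor budget goes into more streaming multiprocessors and a bigger L2 cache, which is a large part of why it processes workloads significantly faster than the A100.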

- Memory Matters: The H100 comes with up to 80GB of high-bandwidth memory, using HBM3 or HBM2e depending on the variant. What lets the chip access data quickly is the memory bandwidth rather than the capacity: it is on the order of 2 TB/s with HBM2e and over 3 TB/s with HBM3. That throughput is crucial for complex AI tasks and high-performance computing (HPC).

- Introducing Hopper Architecture: The H100 is built on NVIDIA’s Hopper architecture, which is designed specifically to accelerate AI workloads. Its advancements, such as the Transformer Engine and support for FP8 precision, optimize performance for both training and running AI models.
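To make the capacity versus bandwidth distinction concrete, here is a minimal Python sketch that estimates how long one full pass over the GPU’s memory would take. The 80GB capacity comes from the text above; the 2 TB/s and 3 TB/s bandwidth values are round numbers assumed for illustration, not exact specifications, and `stream_time_ms` is a hypothetical helper name.

```python
def stream_time_ms(bytes_to_read: float, bandwidth_bytes_per_s: float) -> float:
    """Lower bound, in milliseconds, on reading `bytes_to_read` once from memory.

    Ignores latency, caching, and access patterns, so real workloads take longer.
    """
    if bandwidth_bytes_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return bytes_to_read / bandwidth_bytes_per_s * 1e3


GB = 1e9   # decimal gigabyte
TB = 1e12  # decimal terabyte

CAPACITY_BYTES = 80 * GB  # memory capacity quoted in the article

# Assumed round bandwidth figures, for illustration only.
ASSUMED_BANDWIDTH = {
    "HBM2e (assumed 2 TB/s)": 2 * TB,
    "HBM3 (assumed 3 TB/s)": 3 * TB,
}

for label, bandwidth in ASSUMED_BANDWIDTH.items():
    elapsed = stream_time_ms(CAPACITY_BYTES, bandwidth)
    print(f"{label}: about {elapsed:.1f} ms per full pass over 80 GB")
```

Under these assumptions, a memory-bound job that touches every byte once per step cannot run faster than a few dozen steps per second, no matter how much compute is available. That is why bandwidth matters as much as capacity.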

AI Powerhouse:

- The H100 truly shines in the realm of Artificial Intelligence. It features fourth-generation Tensor Cores, specialized processing units built for the matrix math at the heart of AI computations. These allow the H100 to train AI models significantly faster than previous generations of GPUs.

- Automatic Inline Compression: This innovative feature allows the H100 to compress data on the fly, maximizing memory utilization and further boosting performance for AI tasks.

Beyond AI:

While the H100 excels at AI, its capabilities extend to other demanding applications:

- High-Performance Computing: Scientific simulations, engineering tasks, and other computationally intensive workloads benefit from the H100’s raw processing power and memory bandwidth.

- Data Centers: The H100 is designed for data center environments, allowing businesses and organizations to leverage its power for large-scale AI projects and complex data analysis.

Not for Everyone:

The H100 is a powerful chip, but it comes with a price tag to match. Due to its high cost and specialized capabilities, it’s primarily targeted towards data centers, research institutions, and companies heavily invested in AI development.

The H100 has since been followed by NVIDIA’s Blackwell architecture. How much faster Blackwell chips are depends on the specific task being performed:

- AI Training: Blackwell GPUs are advertised as up to 4 times faster than their predecessors, the Hopper architecture chips, for training AI models.

- AI Inference: For inference, that is, running a trained model to make predictions on new data, Blackwell is advertised as up to 30 times faster than Hopper chips.
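Because these are "up to" figures, they are best read as upper bounds. The short Python sketch below turns the advertised maximum speedups into best-case run times. The 120-hour baseline is a made-up number for illustration, and `best_case_hours` is a hypothetical helper, not part of any NVIDIA tool.

```python
def best_case_hours(baseline_hours: float, max_speedup: float) -> float:
    """Shortest run time implied by an 'up to N times faster' claim."""
    if max_speedup < 1:
        raise ValueError("a speedup claim should be at least 1x")
    return baseline_hours / max_speedup


# Advertised upper bounds quoted in the article.
ADVERTISED_MAX_SPEEDUP = {"training": 4.0, "inference": 30.0}

# Hypothetical time the same job takes on Hopper (assumption for illustration).
HYPOTHETICAL_HOPPER_HOURS = 120.0

for task, speedup in ADVERTISED_MAX_SPEEDUP.items():
    hours = best_case_hours(HYPOTHETICAL_HOPPER_HOURS, speedup)
    print(f"{task}: no less than {hours:.0f} h on Blackwell (vs {HYPOTHETICAL_HOPPER_HOURS:.0f} h)")
```

Real gains depend on the model, numeric precision, and system configuration, so a given workload may land well short of these best-case numbers.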